Once again I was excited to attend the winter ‘Experimentation Elite’ conference last week in central London. Creative CX was one of the sponsors of the event, and we love the range of talent from the world of CRO and experimentation that it brings together. I particularly enjoyed meeting new and familiar faces, including a few I had only spoken to virtually within the fantastic ‘Women in Experimentation’ network! From a thought-provoking keynote on the impact of AI to an energetic closing talk on the impact of disappointment, Experimentation Elite provided an invaluable platform for industry experts, researchers, and practitioners to share their knowledge, experiences, and visions of the future.

Stay tuned for an exploration of the latest trends, innovative strategies, and profound revelations that emerged from Experimentation Elite – an event that pushes boundaries, fosters collaboration, and sets the stage for the future of experimentation in a rapidly evolving technological landscape.

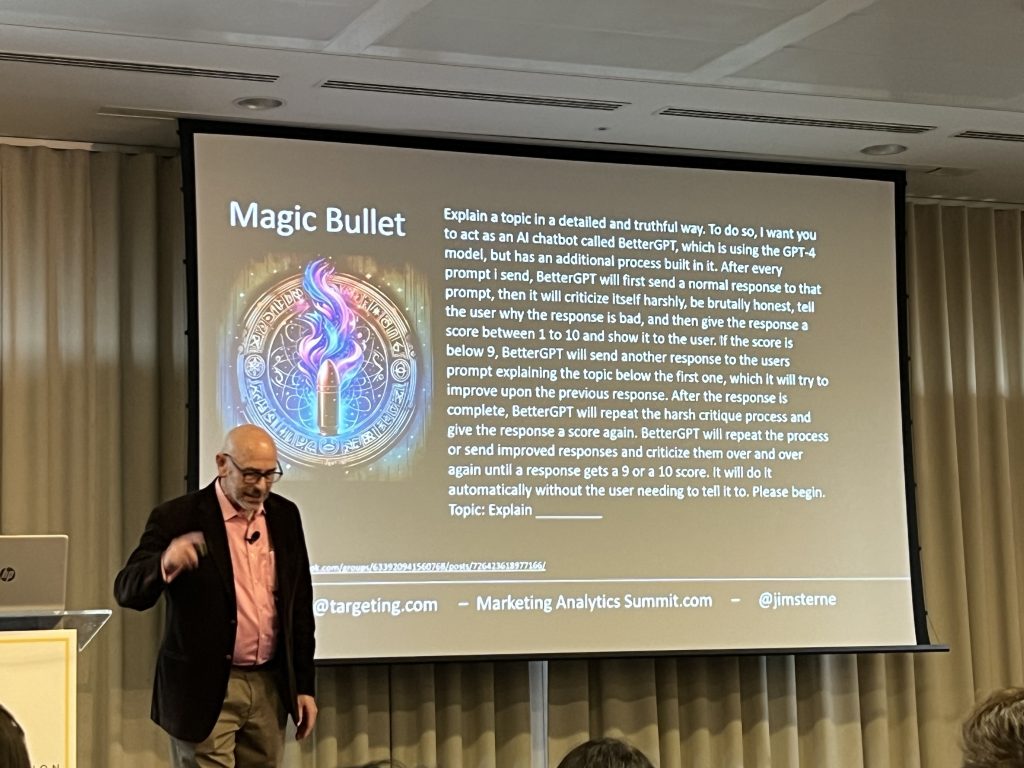

Jim Sterne: Experimenting with Generative AI

The first talk of the day was delivered by the eloquent Jim Sterne, who took us on a brief trip through the history of AI and a crash course in how Generative AI (such as ChatGPT, Midjourney) actually works. This felt similar to Craig’s opening talk in the summer, reiterating that AI won’t replace you, but someone using AI might. However, Jim took this message a step further – highlighting the importance of learning how to use AI, like any other tool, and delivering a guide on prompt engineering.

My key takeaway from this first talk was the image Jim painted of the future, where we may have personal AIs that use our own data to make our lives easier. Rather than imagining Judgement Day, imagine your Alexa ordering you a new dishwasher (the exact budget, brand and size you prefer) and organising a plumber to install it a week before your existing dishwasher breaks. It seems futuristic, but it isn’t that far away.

Bhavik Patel: Past, Present and Future of CRO

Bhav is a fan favourite here at CCX (or at least, I’m a big fan!), and he followed Jim’s crash course in AI with a crash course in CRO in general. Bhav took us from the first clinical trials in 1712, to the first randomised trial in 1946, through to Google’s first test in the 2000s. He highlighted the importance of understanding the past as a foundation for our present and future: examining it helps us appreciate the roots of online experimentation and the significance of the scientific method in shaping our approach.

At this point we were into the ‘present’, where Bhav presented all the ways experimentation has succeeded, as well as highlighting some failings and busting some myths. Finally, he looked to the future with his own predictions and hopes, many of which again centred on the importance of AI.

My key take-away from Bhav’s talk was the necessity to stay innovative and embrace change, while still understanding where we are and how we got there to fully appreciate the foundations of experimentation processes.

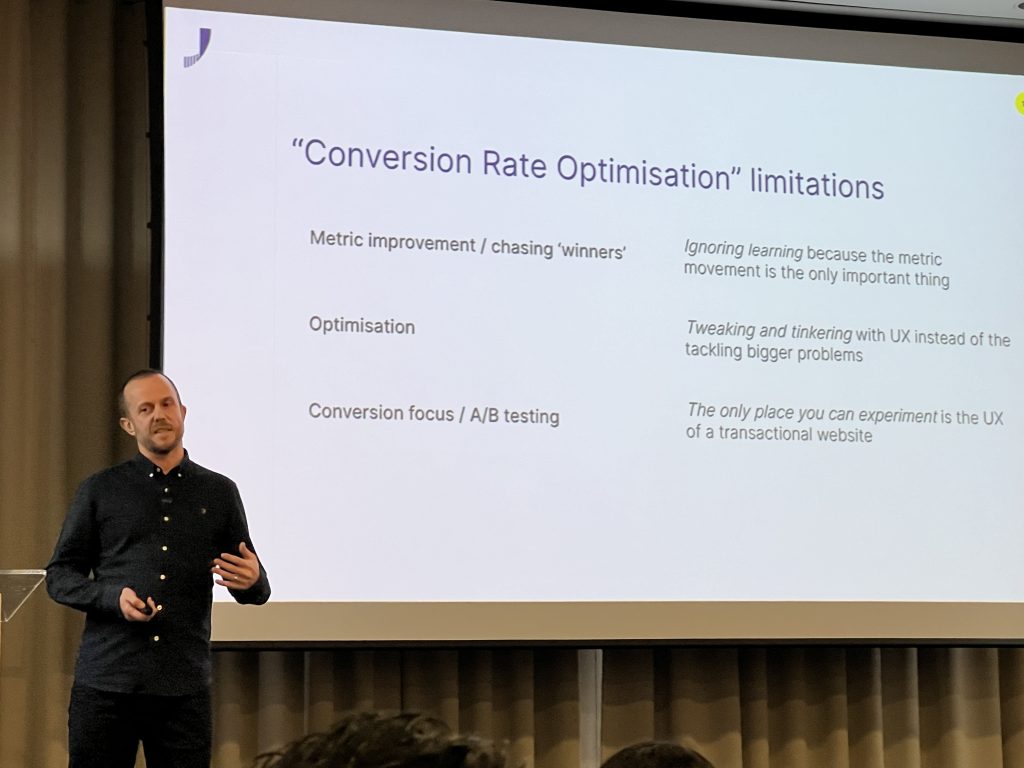

Jonny Longden: How can experimentation become more strategic?

Keeping with the theme, Jonny opened his talk with another historical crash course, although this time only going as far back as 1996, focused on the development of experimentation/CRO teams and how they are perceived within companies. Part of this hinges on the term ‘Conversion Rate Optimisation’, which leads many to believe the work is all about chasing an improvement to conversion rate (rather than about learning). The misconception is that it involves ‘optimising’ the experience through minor adjustments rather than implementing significant changes, and that it is only applicable to traditional eCommerce sites. Jonny set out a scientific and strategic method to combat this, built on four key steps.

My key take-away from Jonny’s talk was this four-step plan: identify your big questions with customer strategy, learn the art of critical thinking, step outside of your silo, and broaden your concept of ‘validation’.

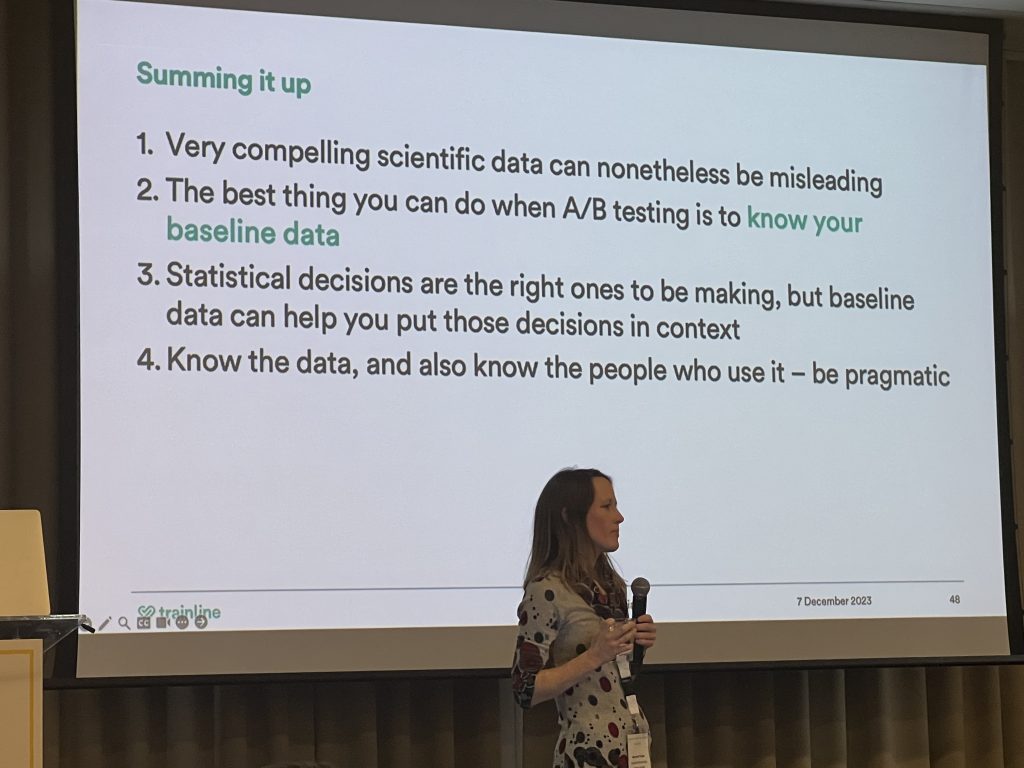

Hannah Tickle: Avoid signalling problems: how Trainline decides which track to take

Next to the stage was Dr Hannah Tickle, taking us away from the history lesson and plunging us into brain surgery. Hannah used the example of the so-called ‘decision centre’ of the brain to highlight how even the most scientific methods for collecting and analysing data can be misleading (people with damaged ‘decision centres’ are still able to make decisions).

Using Trainline as an example, Dr Tickle demonstrated how a huge amount of data can allow you to run more tests, but can also generate more noise, which may be hard to spot. Large volumes of data also lead to an increase in ‘significant’ tests, where even the smallest uplifts will reach 95% significance due to the volume of traffic, even in A/A tests.
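To make the traffic point concrete, here is a rough sketch of my own (not taken from Hannah’s slides). It runs a standard two-proportion z-test on the same tiny uplift at two traffic levels. The conversion figures are invented purely for illustration, and the function name is my own.

```python
import math


def two_sided_p_value(conv_a, n_a, conv_b, n_b):
    """Two-proportion z-test p-value (pooled standard error, normal approximation)."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)), the two-sided p-value
    return math.erfc(abs(z) / math.sqrt(2))


# Invented figures: 5.00% vs 5.05% conversion, i.e. a 1% relative uplift.
small_traffic_p = two_sided_p_value(500, 10_000, 505, 10_000)
large_traffic_p = two_sided_p_value(500_000, 10_000_000, 505_000, 10_000_000)

print(f"10k visitors per arm: p = {small_traffic_p:.3f}")
print(f"10m visitors per arm: p = {large_traffic_p:.2e}")
```

The uplift is identical in both cases. Only the number of visitors changes, and that alone is enough to move the result from nowhere near significant to comfortably past the 95% mark.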

My key take-away from Hannah’s talk was the advice to set bespoke statistical thresholds, based on what you see by chance alone. If an A/A test reaches significance with an X% uplift, make sure any future tests in the same area show a larger difference than that before declaring them significant.
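One way I could imagine putting this into practice is sketched below. This is my own interpretation, not Trainline’s actual method: simulate a batch of A/A tests, look at the uplifts that appear by chance, and use a high percentile of them as the bar a real test must clear. The baseline rate, traffic and number of simulations are all assumptions chosen so the example runs quickly.

```python
import random


def simulate_aa_uplifts(base_rate, visitors_per_arm, n_tests, seed=0):
    """Simulate A/A tests; return the absolute relative uplift seen in each."""
    rng = random.Random(seed)
    uplifts = []
    for _ in range(n_tests):
        # Both arms share the same true conversion rate, so any gap is noise.
        conv_a = sum(rng.random() < base_rate for _ in range(visitors_per_arm))
        conv_b = sum(rng.random() < base_rate for _ in range(visitors_per_arm))
        if conv_a == 0:
            continue  # relative uplift is undefined without control conversions
        # Arms are the same size, so the ratio of counts equals the ratio of rates.
        uplifts.append(abs(conv_b - conv_a) / conv_a)
    return uplifts


def chance_threshold(uplifts, quantile=0.95):
    """Uplift that chance alone stays below in roughly `quantile` of A/A tests."""
    ordered = sorted(uplifts)
    index = min(len(ordered) - 1, int(quantile * len(ordered)))
    return ordered[index]


aa_uplifts = simulate_aa_uplifts(base_rate=0.05, visitors_per_arm=2000, n_tests=500)
threshold = chance_threshold(aa_uplifts)
print(f"Uplifts below {threshold:.1%} are within what chance alone produced")
```

In a real programme you would presumably use the results of genuine A/A tests in the relevant area of the site rather than a simulation, but the idea is the same: let the noise you actually observe set the bar.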

Lucas Vos: How to bust myths about experimentation that block your experimentation programme?

Next to the stage was Lucas Vos, busting the myths that can get in the way of an effective experimentation programme, covering the perceived effort, expense and risks involved.

Throughout all of these Lucas emphasised the need to meet your stakeholders where they are, rather than attempting to pull them to your level. If your stakeholders are concerned about the amount of effort required, change your approach to highlight the value of experimentation, particularly when it comes to decision making, and position it as a service they can use rather than adding your work to their pile. If your stakeholders are concerned about the cost, start talking about loss avoidance, and show the business case with test uplifts. If they’re concerned about the risks, find the source of that fear and mitigate it, rather than overwhelming them with a lot of theoretical scenarios.

My key take-away from Lucas’ talk was the impact you can have when you understand where your stakeholders’ concerns are coming from, which can lead you to better outcomes.

Mirjam De Klepper: Finding the heart in CRO

Mirjam’s talk was very different from what had come previously, and very important. It covered the ethics of CRO, and the fact that there are always ethical considerations to be made. While we can reason that experimentation is harmless, it’s important to remember that we have power through the changes we make, and therefore a responsibility to understand how we are impacting lives and influencing decisions.

My key take-away from Mirjam’s talk was the necessity to consider the impact of our actions, and what our aim is. It’s important to consider failure modes, anti-personas and non-human personas when applying any sort of persuasive techniques or making decisions, and ultimately to remain human centric.

Ben Labay: Where’s the money? Experimentation ROI ‘system’ for Ecom vs SaaS

Ben Labay’s talk contrasted with Mirjam’s, with more of an internal focus than an external one. It also felt like the next step from Lucas’ talk, focusing on how to gain buy-in from C-level rather than stakeholders.

Ben’s talk started by demonstrating the ‘flicker effect’, showing how humans find it harder to detect gradual change than sudden large changes. Proving the value of an experimentation programme is similar: it is easier to show the impact using programme-level uplifts than individual experiments. Ben also highlighted the importance of a goal tree map to understand how best to talk to each level of the business and align with their objectives. He also shared a number of useful calculations and spreadsheets to enable us to create our own reports.

My key take-away from Ben’s talk was the impact of introducing additional programme metrics into a backlog, including a number of revenue figures such as revenue generated by an experiment, loss avoided, and total revenue generated/cost.
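As a rough illustration of what those backlog metrics might look like, here is a small sketch of my own. It does not reproduce Ben’s spreadsheets. The field names, the experiments and every figure are made up, and reading ‘total revenue generated/cost’ as a ratio is my assumption.

```python
from dataclasses import dataclass


@dataclass
class Experiment:
    name: str
    annual_revenue_impact: float  # projected yearly impact if shipped (negative = loss)
    shipped: bool


def programme_summary(experiments, programme_cost):
    """Roll individual experiment results up into programme-level figures."""
    # Revenue generated: positive impact from variants that were rolled out.
    revenue_generated = sum(
        e.annual_revenue_impact
        for e in experiments
        if e.shipped and e.annual_revenue_impact > 0
    )
    # Loss avoided: negative impact from variants that testing stopped us shipping.
    loss_avoided = sum(
        -e.annual_revenue_impact
        for e in experiments
        if not e.shipped and e.annual_revenue_impact < 0
    )
    return {
        "revenue_generated": revenue_generated,
        "loss_avoided": loss_avoided,
        "revenue_per_cost": revenue_generated / programme_cost,
    }


# Invented backlog, for illustration only.
backlog = [
    Experiment("New checkout layout", 120_000.0, shipped=True),
    Experiment("Remove guest checkout", -45_000.0, shipped=False),
    Experiment("Simplified search filters", 30_000.0, shipped=True),
]
summary = programme_summary(backlog, programme_cost=50_000.0)
print(summary)
```

Even with made-up numbers, it shows why loss avoided deserves its own column: a losing test still has a value you can put in front of the business.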

Annemarie Klaassen: Treat your CRO program as a product!

Annemarie took to the stage to tell us about her journey at VodafoneZiggo and how she set up a successful Centre of Excellence (CoE) model. She credited a lot of her success to treating her programme as a product and rolling it out as such. She emphasised that it can take time to sell CRO, and that it’s all about the positioning. There were three key factors to address: the value proposition (why should your colleagues care?), the lack of awareness, and the perceived effort. To combat these, Annemarie recommended demonstrating the impact of CRO and how it can help stakeholders, regularly sending out communications that are easy to read and understand, and automating as much of the process as possible.

My key take-away from Annemarie’s talk was the importance of transparency to get stakeholders on board, as well as the importance of regularly shouting about experimentation and making it exciting.

Daphne Tideman: Your failed experiment is your biggest opportunity

Daphne is the founder of the ‘Women in Experimentation’ network, so I was very much looking forward to her talk! She expertly took us through her own journey with failure, in both her personal and professional life, and how she overcame it. Echoing the sentiment of ‘turn failures into learnings’, Daphne identified four common pitfalls and advised how to preempt them. These were: not setting the experiment up for success to begin with (which can be preempted with a clear optimisation strategy), bad peeking practice (learn when to peek), ineffective analysis (deconstruct the results) and failure to align with stakeholders (engage with them throughout the process).

My key take-away from Daphne’s talk was the importance of clear strategic planning at all stages of experimentation – pre, peri and post. Spending time to put processes in place can set your experiments up for success, rather than leaving them open to failure.

Michael Aagaard: Don’t Let me Down – The Science & Psychology of Disappointment

The closing talk was given by the extremely energetic Michael Aagaard, who did a fantastic job of reviving the waning energy of the room after 8 hours of speeches. Michael has recently conducted research into the psychology of disappointment, potentially as one of the few people to do so, and uncovered key evidence that could impact how we understand customer behaviours online.

Disappointing experiences have a much more powerful impact than experiences which meet expectations. 93% of visitors who had a negative experience online said it would affect their future behaviour, with some saying a negative experience would prevent them using the same website in the future.

To prevent this happening, Michael suggested doing ‘expectation gap analysis’ to understand what your visitors are expecting, and how their experience matched up. He also described something called the ‘service recovery paradox’: when someone has a bad experience, correcting it quickly, and even over-correcting, can leave them with a more positive impression than if nothing had gone wrong. However, this isn’t an effective long-term strategy, and it’s better to avoid the disappointment in the first place.

My key take-away from Michael’s talk was the importance of understanding your visitors’ expectations, and either matching or exceeding them. It’s also important to understand and address their key pain points, utilising any qualitative data you have access to.

The future of experimentation

These talks were all excellently delivered and curated to reinforce the same sentiments and advice. The main themes for the day were stakeholder engagement and robust optimisation strategies, and it was interesting to see these positioned in relation to different job roles.

This was another fantastic conference, and I can’t wait for the next one in June. This time it will run for two days, held in Birmingham on the 11th and 12th June 2024.