Validating the desirability and value of adding photos to Treatwell’s reviews using “fake-door experiments”

Invaluable early insights into the potential of a new product feature

Background

Some customer problems cannot be investigated or solved with a typical A/B/n test. The popularity or value that comes from a new development-heavy feature is one of them.

Typically we see businesses go ahead with building these features, relying on the customer research they have gathered as evidence of value. However, we have found, more often than not, that these features may not provide the same value as initially indicated in research. This is where “fake door” experiments could provide valuable data-based insights prior to investing time and effort.

Fake door experiments, sometimes referred to as painted door experiments, are minimum viable products where we pretend to provide a product, feature, or service to our users that is not yet available. It’s a powerful method that allows us to rapidly validate an idea at minimal cost, as we are not actually developing it.

The Opportunity

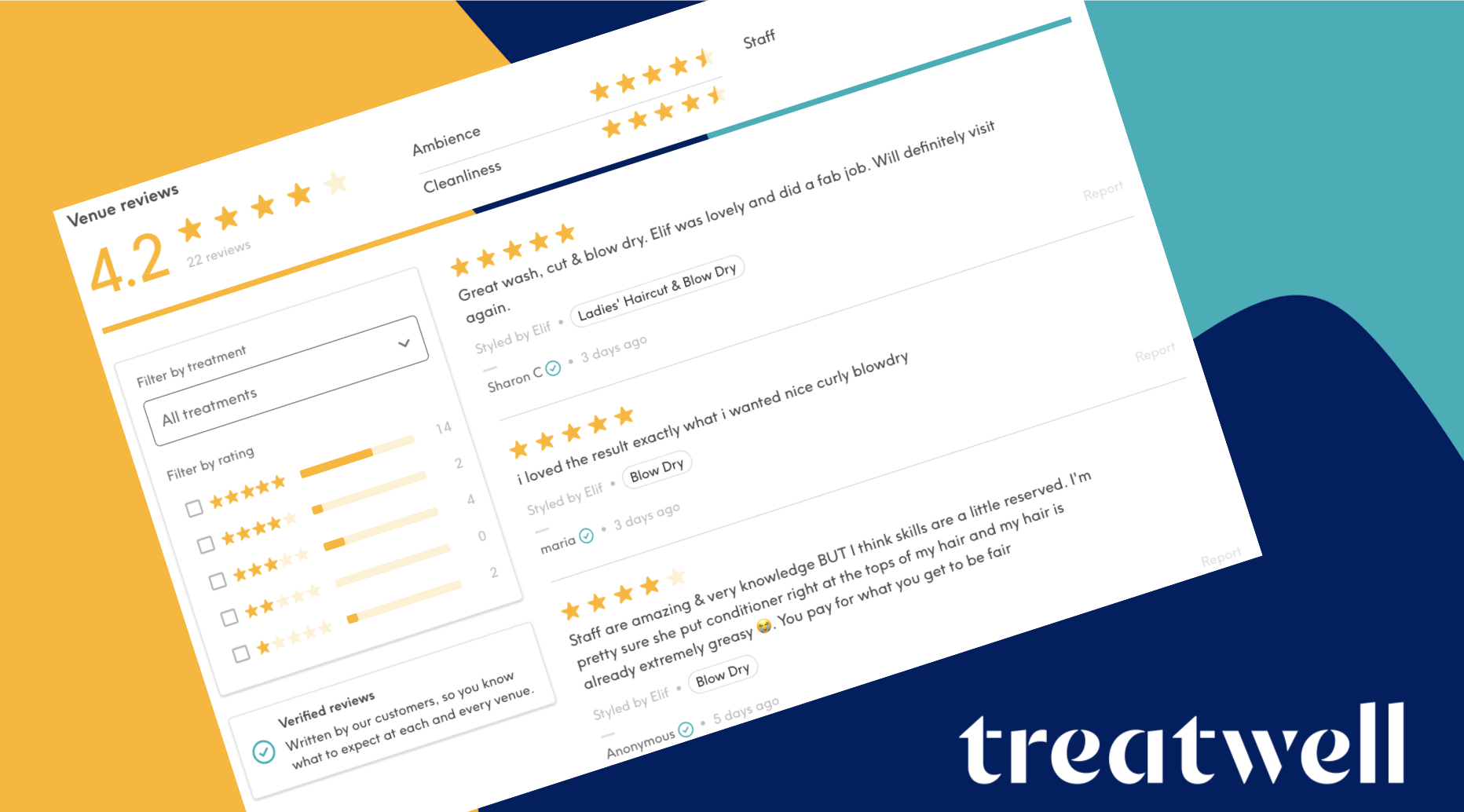

Previous experimentation carried out on Treatwell, a leading beauty salon booking site, demonstrated that customer reviews were crucial for motivating people to book particular salons. User research indicated that people wanted more from the booking experience, and in particular from the reviews displayed: they were actively looking for photos of the salon’s work.

However, image uploading for reviews is technically difficult to build. The effort required from an operational and legal point of view was also high. This meant we needed a business case demonstrating that the value-to-effort ratio was positive, providing confidence that the value added for our new and existing customers justified the large cost behind it.

The Solution

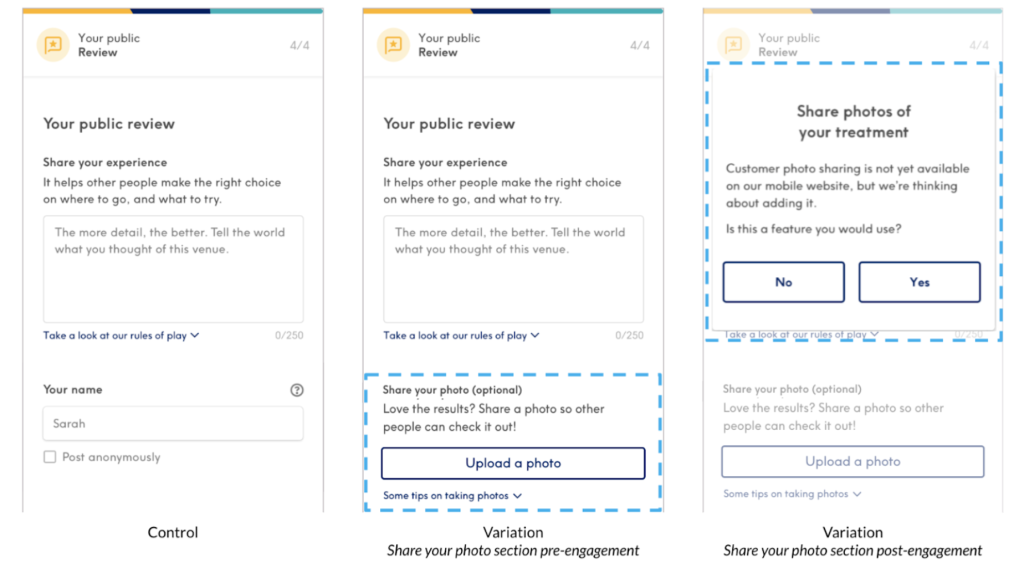

Of the many possible solutions to the above problem, one of the most adventurous ideas focused on whether customers would want to upload photos with their reviews. As mentioned, building this feature would be costly and risky. A “fake door” A/B/n test therefore allowed us to introduce a new photo upload feature that wasn’t yet functional.

The main objective of this “fake door” test was to understand how popular image uploads would be with our existing customers, providing the confidence that this was a feature customers would actually utilise.

A “share your photos” section was added to the review funnel, which encouraged users to attach their pictures of the venue or treatment to their review. Within this section was an “upload a photo” button, which when clicked, would show a polite message informing users that the feature was planned, but not yet available. Alongside this was a quick question that asked users if they would actually use this feature, distinguishing intent from curiosity.

The Results

Engagement with the “upload a photo” button was around 3%, which seems relatively low when looked at in isolation. This number needed to be understood within a wider business context, where it quickly turned from “only 3% of users” to “300 images a week? We could do a lot with that figure!”.
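The reframing above is simple arithmetic, and a minimal sketch makes it explicit. The article only gives the approximate figures "around 3%" and "300 images a week"; the weekly traffic of roughly 10,000 users is merely what those two numbers imply together, not a figure reported by the source. The function names are hypothetical.

```python
def projected_weekly_uploads(weekly_reviewers: float, click_rate: float) -> float:
    """Expected weekly clicks on the upload button for a given traffic level."""
    return weekly_reviewers * click_rate


def implied_weekly_reviewers(weekly_uploads: float, click_rate: float) -> float:
    """Traffic level that would produce the given weekly uploads at this click rate."""
    return weekly_uploads / click_rate


# Figures quoted in the article (both approximate).
CLICK_RATE = 0.03
WEEKLY_UPLOADS = 300

# Inferred, not reported: the traffic implied by the two figures above.
implied_traffic = implied_weekly_reviewers(WEEKLY_UPLOADS, CLICK_RATE)
print(f"Implied weekly users seeing the button: about {round(implied_traffic)}")
```

A small percentage can look unimpressive until it is multiplied by the traffic it applies to, which is why the absolute weekly volume is the more useful number for a business case.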

When analysing these results, we found that engagement with this section was influenced by three factors: the placement of the section within the journey, the device being used and the treatment type being reviewed.

- We found that adding this feature within the review funnel significantly decreased review submissions, indicating that the feature either distracted users from completing their review or acted as a barrier because users believed it was a compulsory step. This led us to iterate on the placement, moving the section to after a user had submitted their review to see whether they would add photos post-completion. This placement saw the same number of engagements with the “upload a photo” button without the significant drop in submissions.

- We found the majority of photo upload attempts were coming from mobile devices, where it is easier to access your camera roll than on a desktop device.

- We found that not all treatment types warranted photo uploads. Users leaving reviews for nails showed the highest engagement with the upload button. They were followed by users reviewing hair and face treatments: for hair, styling rather than maintenance cuts, and for face, eyelashes and brows rather than facials. Reviews for massages, body treatments and hair removal saw minimal to no engagement, as one would expect.
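The first finding above rests on the claim that review submissions dropped "significantly" in one placement and not in the other. The article does not say which statistical test was used; a two-proportion z-test is one common choice for comparing submission rates between a control and a variant, and the sketch below assumes that approach. The function name and the counts in the usage example are hypothetical placeholders, not Treatwell data.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(success_a: int, total_a: int,
                          success_b: int, total_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in rates, B minus A."""
    rate_a = success_a / total_a
    rate_b = success_b / total_b
    # Pooled rate under the null hypothesis that both groups share one rate.
    pooled = (success_a + success_b) / (total_a + total_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical placeholder counts, for illustration only:
# control = review funnel without the photo section, variant = with it.
z, p = two_proportion_z_test(success_a=2000, total_a=5000,
                             success_b=1850, total_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A negative z with a small p-value would indicate that the variant's submission rate is lower than the control's by more than chance alone would plausibly explain. Running the same comparison for the post-submission placement would be one way to confirm that the drop had disappeared.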

All of these data points gathered from the “fake door” test helped us build a more solid picture of the impact the feature could have, beyond just the initial usage of a button. This allowed us to make a strategic call on what we would do going forward. It also began the conversation, and the inevitable experimentation, around the “quality” versus “quantity” aspect of reviews and the impact that photos within reviews would have on users booking a salon.